AI Regulation in Europe

The European Union (EU) has positioned itself as a global pioneer in artificial intelligence (AI) regulation with the adoption of the Artificial Intelligence Act, or AI Act (Regulation (EU) 2024/1689). This landmark legislation, the first comprehensive AI law in the world, creates a regulatory framework for AI that seeks to balance innovation with the protection of fundamental rights.

Main Aspects of the AI Act

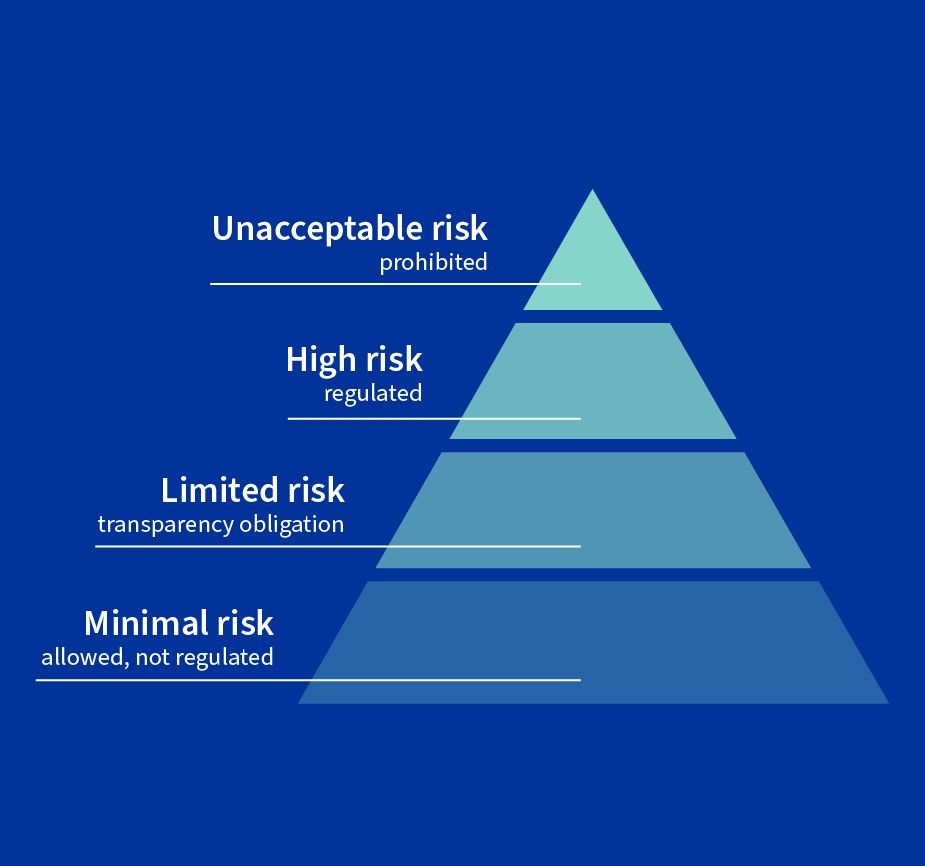

Risk-based approach: The legislation categorizes AI systems according to their potential risk level, imposing stricter obligations for systems considered high-risk.

Transparency and accountability: AI systems must be transparent, especially when they interact with humans. Chatbots, for example, must inform users that they are communicating with an AI system.

Specific prohibitions: Certain AI practices deemed to pose an unacceptable risk are banned, such as social scoring and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, which is allowed only under narrow exceptions.

Obligations for providers and deployers: The Act sets clear requirements for those who develop AI systems (providers) and those who use them (deployers), especially for high-risk applications.

Implementation and Deadlines

The AI Act entered into force on August 1, 2024, with a staggered timeline for full implementation:

- Prohibitions apply after 6 months (from February 2, 2025)

- Governance rules and obligations for general-purpose AI models apply after 12 months (from August 2, 2025)

- The rest of the law applies after 24 months (from August 2, 2026), except the rules for high-risk AI systems embedded in products already covered by EU product-safety legislation, which have 36 months (until August 2, 2027)
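
The staggered timeline boils down to a simple date lookup. The sketch below hard-codes the Regulation's application dates (February 2, 2025; August 2, 2025; August 2, 2026; August 2, 2027) and reports which milestones already apply on a given day. The milestone labels and the function name `milestones_in_effect` are illustrative assumptions, not part of any official tool, and the labels simplify what each date actually triggers.

```python
from datetime import date

# Application dates of the main AI Act milestones (entry into force: 2024-08-01).
# Labels are simplified, illustrative summaries.
MILESTONES = [
    (date(2025, 2, 2), "Prohibited AI practices"),
    (date(2025, 8, 2), "Governance rules and general-purpose AI obligations"),
    (date(2026, 8, 2), "General application of the Regulation"),
    (date(2027, 8, 2), "High-risk rules for AI in regulated products"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of milestones whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    # On September 1, 2025, only the first two milestones apply.
    print(milestones_in_effect(date(2025, 9, 1)))
```

Keeping the dates in one ordered list means a new or amended deadline only requires editing a single entry.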

Global Impact

While focused on the EU, the AI Act will have a global impact.

Companies outside the EU will need to comply if they place AI systems on the EU market or if the output of their systems is used in the EU. Other countries and regions are expected to use this law as a model, much as happened with the GDPR.

The implementation of the AI Act presents challenges, especially for small and medium-sized enterprises. However, it also creates opportunities for Europe to position itself as a leader in ethical and trustworthy AI.

The EU seeks, through this legislation, to establish a global standard for responsible AI development and use, promoting innovation while protecting fundamental rights and democratic values.

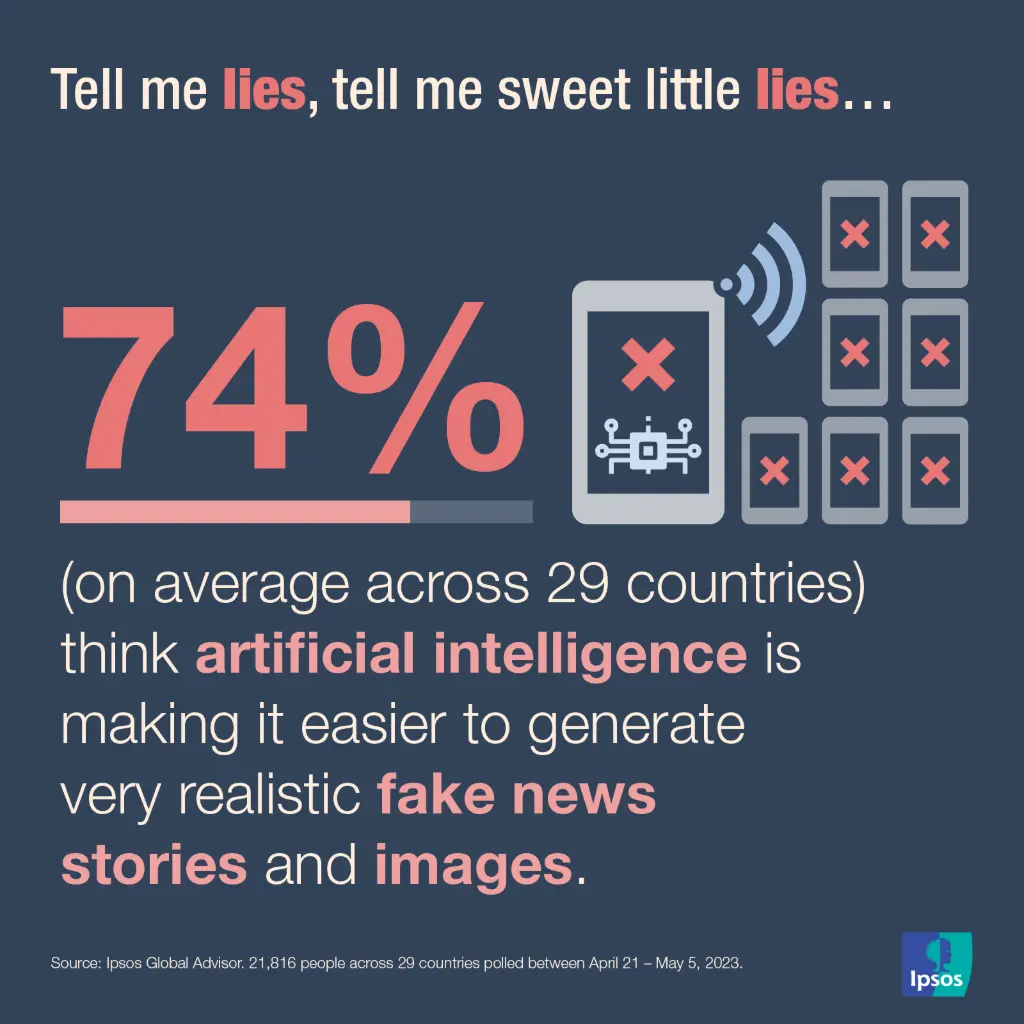

How the EU AI Act Addresses Disinformation and Fake News

The European Union (EU) AI Act addresses the issue of disinformation and fake news in various ways, recognizing AI's potential to both create and combat this problem.

Here are the key aspects:

Regulation of AI-Generated Content

Mandatory transparency: The law imposes transparency obligations on AI systems, especially those that interact with humans or generate content. Providers of systems that generate synthetic audio, images, video, or text must ensure that the output is marked in a machine-readable format as artificially generated.

AI content labeling: Those who use AI systems to create deepfakes must clearly disclose that the content has been artificially generated or manipulated, labeling it accordingly so that its artificial origin is evident.

Combating Disinformation

AI use for detection: The EU's approach also recognizes that advanced AI technologies can help combat disinformation by analyzing patterns, language use, and context, supporting content moderation, fact-checking, and the detection of false information.

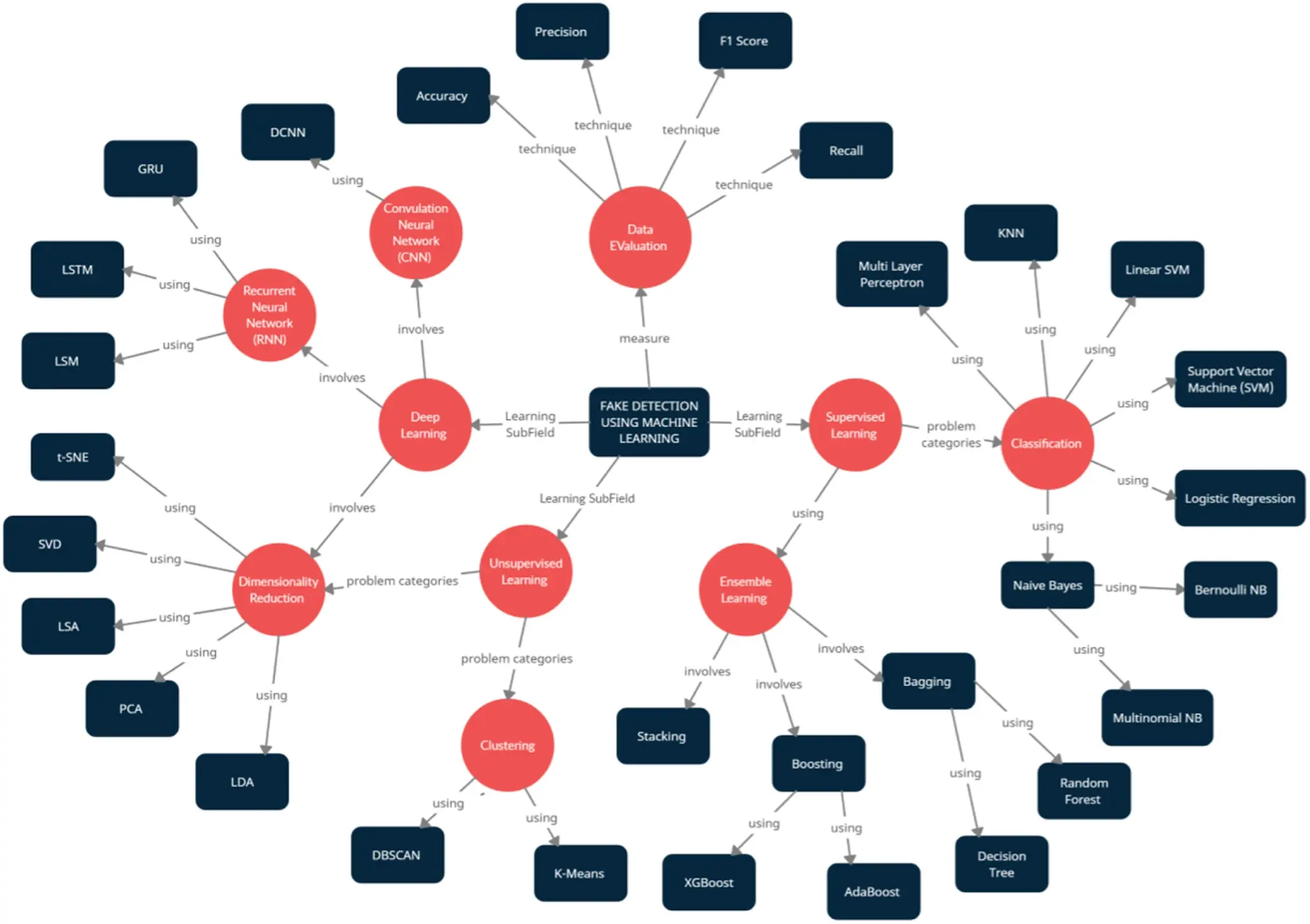

Concept map of techniques for fake news detection, as proposed by Varma et al. (2021).

Collaboration with platforms: The European Commission requested that large technology platforms, such as Google, Facebook, YouTube, and TikTok, detect and clearly label AI-generated photos, videos, and texts.

Penalties and Compliance

Significant fines: The law establishes severe penalties for non-compliance, with fines for prohibited AI practices of up to 35 million euros or 7% of global annual turnover, whichever is higher.

Obligations for providers and deployers: Beyond the transparency rules, the Act imposes requirements on those who develop and use AI systems, especially for applications considered high-risk.
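
The cap on fines for prohibited practices is simple arithmetic. A minimal sketch, assuming the rule of Article 99(3) (the higher of EUR 35 million and 7% of worldwide annual turnover for the preceding financial year) and, for SMEs and start-ups, the lower of the two as provided in Article 99(6). The function name `max_fine_prohibited_practice` is hypothetical, and the result is only the legal maximum, not the amount a regulator would actually impose.

```python
from decimal import Decimal

# Fixed amount and turnover share for prohibited AI practices (AI Act, Article 99(3)).
FIXED_CAP_EUR = Decimal("35000000")
TURNOVER_SHARE = Decimal("0.07")


def max_fine_prohibited_practice(annual_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Upper bound of the fine; the actual amount is decided case by case."""
    turnover_cap = annual_turnover_eur * TURNOVER_SHARE
    # Larger undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    if is_sme:
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # 7% of EUR 2 billion is EUR 140 million, which exceeds the fixed EUR 35 million amount.
    print(max_fine_prohibited_practice(Decimal("2000000000")))
```

`Decimal` is used instead of `float` so that monetary amounts are computed without binary rounding error.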

Comprehensive Approach

Code of Practice: In 2022, the EU strengthened the Code of Practice on Disinformation, which brings together a wide range of signatories, including online platforms, advertisers, and fact-checkers, around a set of voluntary commitments to combat disinformation.

European Digital Media Observatory (EDMO): The EU set up an independent observatory that brings together fact-checkers and academic researchers with expertise in online disinformation.

The EU AI Act seeks to balance technological innovation with the protection of fundamental rights and democratic values, recognizing AI's dual role in creating and combating disinformation. By imposing strict regulations and promoting collaboration between different sectors, the EU aims to create a more trustworthy and resilient digital environment against the spread of fake news and disinformation.

How the EU AI Act Addresses the Use of Artificial Intelligence in Political Campaigns

Illustration: Yù Yù Blue

The European Union AI Act addresses the use of artificial intelligence in political campaigns in several significant ways, recognizing the potential impact these systems can have on democratic processes. Here are the main aspects:

High-Risk Classification

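AI systems intended to influence the outcome of an election or referendum, or the voting behavior of individuals, are included in the list of high-risk AI applications. Tools used only to organize, optimize, or structure political campaigns from an administrative or logistical point of view are excluded.

This classification means that such systems are subject to stricter requirements and closer oversight.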

Specific Requirements

Transparency: AI systems used in political campaigns must comply with additional transparency requirements. This includes clear disclosure that the content was generated by an AI algorithm.

Prevention of illegal content: AI models must be designed to prevent the generation of illegal content, which is particularly relevant in the context of political campaigns where disinformation can be a concern.

Citizens' rights: The law reinforces citizens' right to file complaints about AI systems and to receive explanations about decisions based on high-risk AI systems that significantly impact their rights.

Risk-Based Approach

The legislation adopts a risk-based approach, establishing specific obligations for AI system providers and users, depending on the level of risk the AI can generate. In the case of political campaigns, where the potential for influence is considerable, more rigorous obligations are expected.

Supervision and Compliance

Organizations using AI in political campaigns will need to assess the Regulation's impact on their activities and business models. This may include identifying processes that require changes to comply with new regulatory obligations.

The EU AI Act thus seeks to balance innovative technology use in political campaigns with protecting the integrity of democratic processes, ensuring transparency, preventing abuses, and protecting citizens' rights in the electoral context.

What Are the Criteria for Classifying an AI System as High-Risk?

The European Union AI Act classifies AI systems as high-risk based on specific criteria:

- Risk to health, safety, and fundamental rights: Systems that pose a significant risk of harm to people's health, safety, or fundamental rights are considered high-risk.

- Application areas: These include biometrics, critical infrastructure, education, employment, access to essential services, law enforcement, migration and border management, and the administration of justice and democratic processes.

- Severity and probability of harm: The severity and likelihood of the harm the system could cause are also taken into account.

These systems must meet rigorous requirements for transparency, human oversight, and technical robustness before they are placed on the market or put into service.

Sources and citations:

The Artificial Intelligence Regulation | SRS Legal (PDF)

Regulation of Artificial Intelligence in the European Union (PDF)

AI content: EU asks Big Tech to tackle disinformation | DW

Tackling online disinformation | Shaping Europe's digital future

Long awaited EU AI Act becomes law after publication in the EU's Official Journal | White & Case LLP

How AI can also be used to combat online disinformation

AI Act | Shaping Europe's digital future (Europa.eu)

EU publishes its AI Act: Key steps for organizations | DLA Piper

EU Artificial Intelligence Act (Regulation (EU) 2024/1689) | Updates